Experiencing noise protection measures in urban living spaces

The NoiseProtect demonstrator, which was developed by Fraunhofer experts based on Machine Learning methods, offers residents, urban planners and experts in acoustics and noise control the opportunity to gain an acoustic impression of how traffic, industrial or construction site noise spreads in urban living spaces and how noise could be effectively reduced. The technology thus provides a decision-making aid before the detailed planning and cost-intensive implementation of noise protection measures.

Intelligent software supports the implementation of noise action plans

Planning noise protection measures is cost-intensive and is usually done graphically with the help of numerical simulations. The web-based auralization tool further simplifies both the planning and the implementation of such measures. Residents and urban planners are informed about noise reduction potential in a particularly comprehensible and user-centered way. The software tool can thus support the implementation of individual noise action plans in urban and industrial environments as well as in the mobility sector.

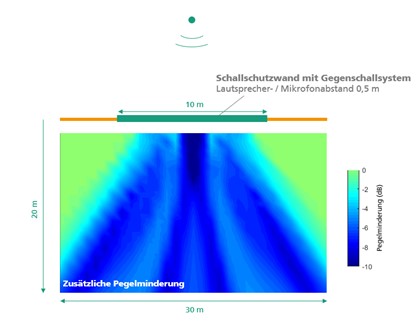

In this application, an AI-supported segmentation of satellite images is used to automatically analyze the acoustic situation of living spaces and prepare it for an object-based auralization. In addition to a noise source and a listening position, the position of a noise protection measure can be defined in the software tool. The auralization is calculated and provided for three states: the initial noise situation (1), the noise situation with a passive noise barrier (2) and the noise situation with an active noise barrier using active noise control (3).

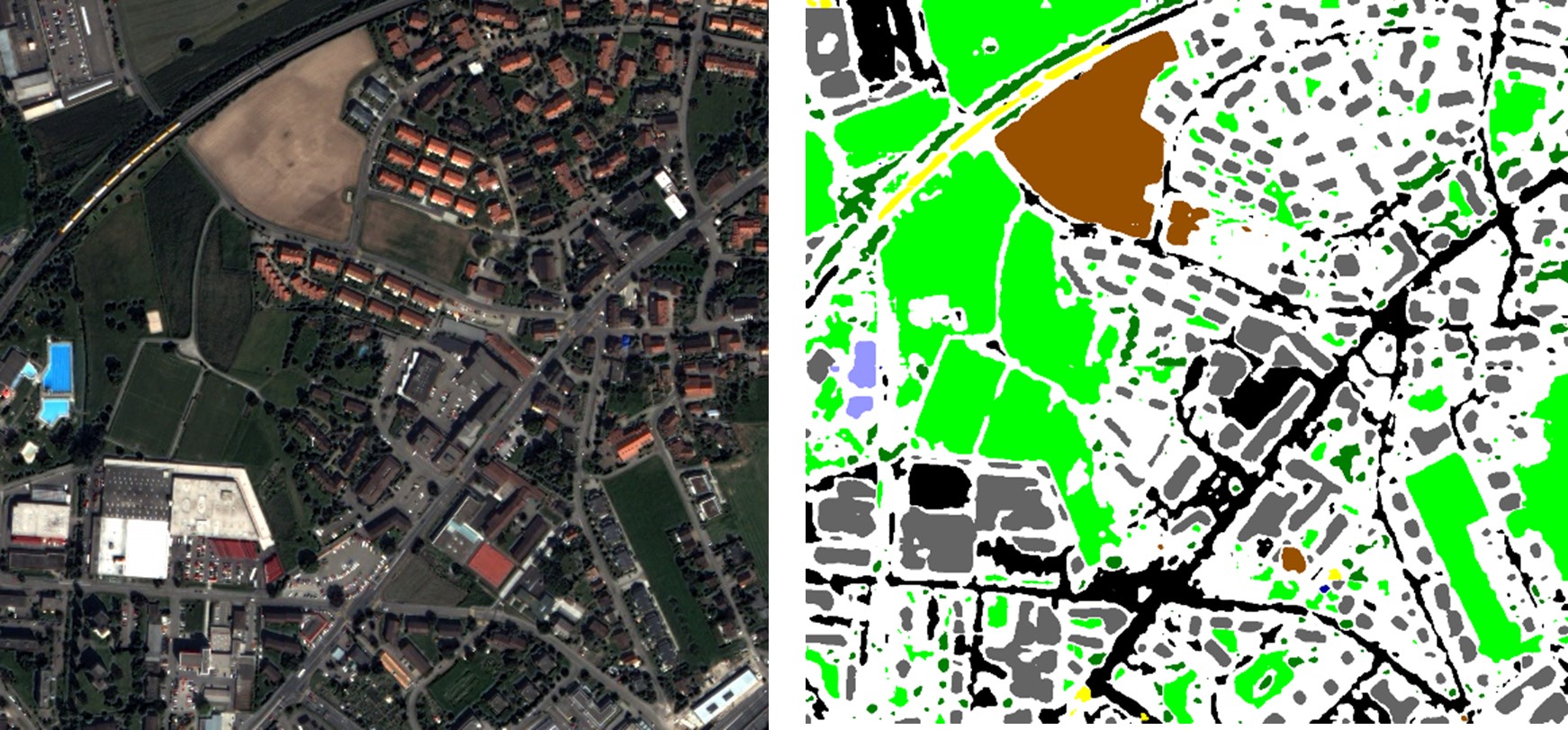

ML offers a pixel-precise segmentation and classification of images to analyze the surroundings

To make the auralization as realistic as possible, the immediate surroundings must be known as precisely as possible. Pixel-precise segmentation of satellite images is an obvious way to obtain this information. Artificial Intelligence (AI) has completely transformed the fields of Machine Learning (ML) and computer vision in recent years. A common use of automatic image processing is the pixel-precise segmentation and classification of various objects in images, whether in medical image processing, in real-time analysis of image data (for example in autonomous driving) or in satellite image analysis.

Often the so-called U-Net architecture, a Deep Learning based neural network, is used for automatic segmentation of objects. Within this project, a U-Net was trained for the automatic segmentation of satellite images. Eight different classes are distinguished: road, trees, field, railroad track, building, grass, water body and swimming pool. The publicly available "Eye-In-The-Sky" data set from DigitalGlobe, Inc. was used for the training. Figure 3 shows an example of a satellite image and the associated automatically generated segmentation.
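The step from network output to a segmentation map can be illustrated with a minimal sketch. It assumes that a segmentation network delivers one score per class for every pixel and that the class with the highest score is chosen. The index order of the eight classes and the helper names `scores_to_labels` and `class_fractions` are hypothetical and are not taken from the NoiseProtect software.

```python
from collections import Counter

# The eight classes named in the text. The index order is an assumption.
CLASSES = [
    "road", "trees", "field", "railroad track",
    "building", "grass", "water body", "swimming pool",
]


def scores_to_labels(scores):
    """Turn per-pixel class scores into a mask of class indices.

    scores[row][col] is a list with one score per entry in CLASSES.
    Each pixel is assigned the index of its highest score (argmax).
    """
    mask = []
    for row in scores:
        mask_row = []
        for pixel in row:
            if len(pixel) != len(CLASSES):
                raise ValueError("expected one score per class")
            mask_row.append(max(range(len(pixel)), key=pixel.__getitem__))
        mask.append(mask_row)
    return mask


def class_fractions(mask):
    """Return the share of pixels assigned to each class present in the mask."""
    counts = Counter(index for row in mask for index in row)
    total = sum(counts.values())
    return {CLASSES[index]: count / total for index, count in counts.items()}
```

A mask of this kind could then be passed on to the acoustic analysis, for example to locate buildings and roads relative to the listening position.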

For auralization, the software tool uses the JavaScript library p5.sound to linearly convolve the sound source signals with the calculated impulse responses of the different noise reduction measures. The impulse responses are calculated with audio signal processing algorithms based on wave field synthesis and with explicit regression methods based on finite-length acoustic impulse responses. The effect of passive noise barriers is simulated using sound immission models of the Federal Immission Control Act (BImSchG).
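The principle of linear convolution can be shown in a few lines. The following is a minimal sketch in Python, not the tool's JavaScript code. The function name `linear_convolve` and the example impulse responses are hypothetical and chosen only for illustration. They do not come from the BImSchG models or from the wave field synthesis calculation.

```python
def linear_convolve(signal, impulse_response):
    """Linearly convolve a source signal with a finite-length impulse response.

    The result has len(signal) + len(impulse_response) - 1 samples.
    """
    if not signal or not impulse_response:
        return []
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for n, x in enumerate(signal):
        for k, h in enumerate(impulse_response):
            out[n + k] += x * h
    return out


# Hypothetical example data: a short source signal and two impulse responses.
source = [1.0, 0.5, -0.25]
ir_initial = [1.0]            # assumption: unobstructed path, signal unchanged
ir_barrier = [0.0, 0.5, 0.1]  # assumption: delayed and attenuated by a barrier

audible_initial = linear_convolve(source, ir_initial)
audible_barrier = linear_convolve(source, ir_barrier)
```

Comparing the two outputs corresponds to listening to the same source with and without the protection measure. For long audio signals, an FFT-based convolution would normally be preferred over this direct form.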

NoiseProtect can simplify the planning of cost-intensive protection measures

The virtual AI demonstrator NoiseProtect makes noise protection measures in urban living spaces audible and thus understandable. In the future, the software tool can simplify the planning of cost-intensive noise protection measures and reduce planning costs through the target-specific selection of noise protection measures.

Further research is required to extend the simulation to include further acoustic effects such as reflections, diffraction, air absorption and additional sound sources in the environment. Further development of the software tool is planned for application-specific questions in the context of the implementation of concrete noise action plans and noise abatement programs.

Research Center Machine Learning
